Abstract

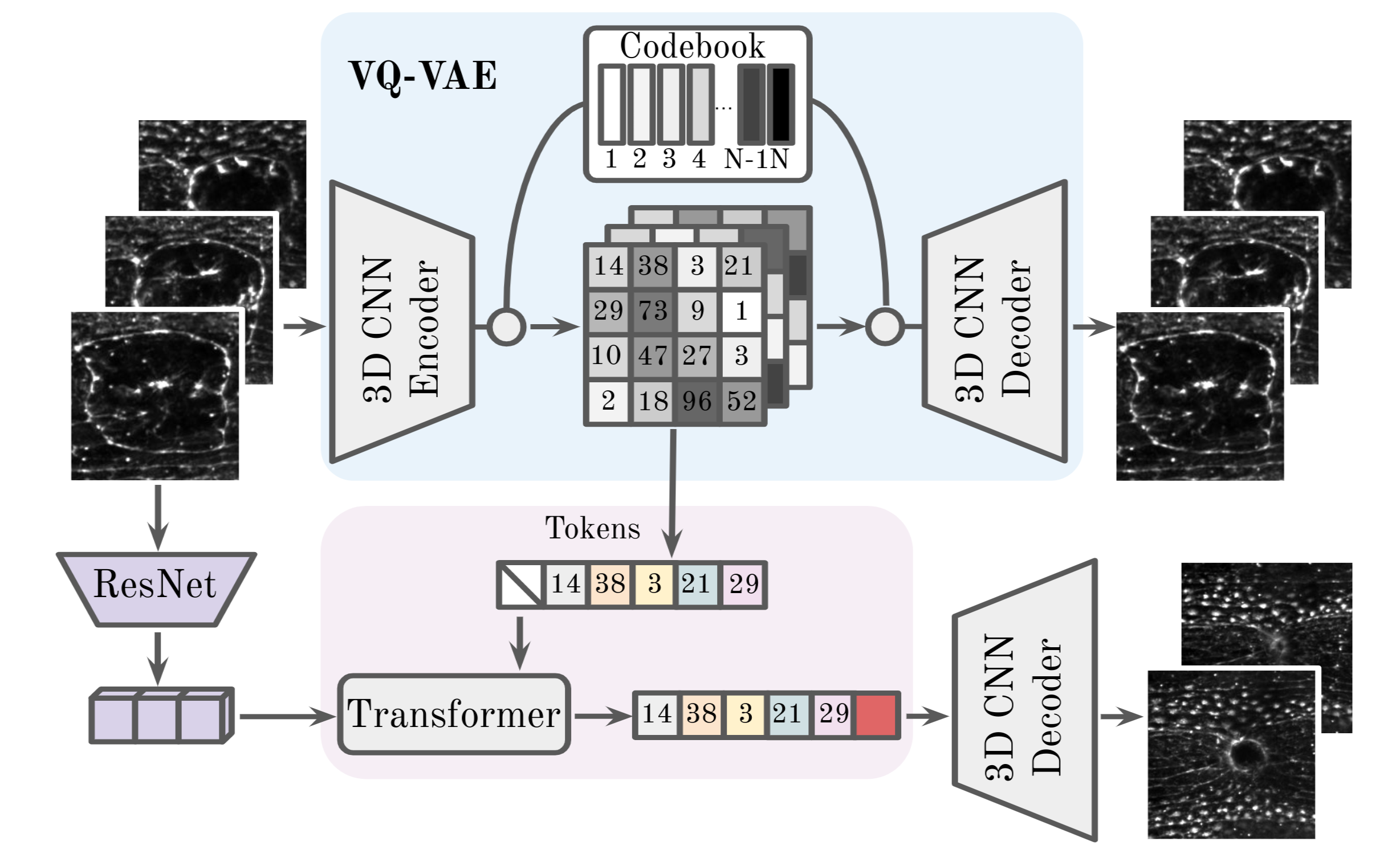

Wound healing is a fundamental mechanism in living animals. Understanding the process is crucial for numerous medical applications, ranging from scarless healing to faster tissue regeneration and safer post-surgery recovery. In this work, we collect a dataset of time-lapse sequences of Drosophila embryos recovering from a laser-incised wound. We model the wound healing process as a video prediction task, for which we use a two-stage approach with a vector quantized variational autoencoder and an autoregressive transformer. We show that our trained model is able to generate realistic videos conditioned on the initial frames of the healing process. We evaluate the model predictions using distortion measures and perceptual quality metrics based on segmented wound masks. Our results show that the predictions keep pixel-level error low while behaving in a realistic manner, suggesting that the neural network is able to model the wound-closing process.

Example Model Predictions

Dataset

Information on how to download the Wound Healing dataset is available here.

Citation

@inproceedings{backova2023woundhealing,
  title = {Modeling Wound Healing Using Vector Quantized Variational Autoencoders and Transformers},
  author = {Lenka Backov\'{a} and Guillermo Bengoetxea and Svana Rogalla and Daniel Franco-Barranco and J\'{e}r\^{o}me Solon and Ignacio Arganda-Carreras},
  booktitle = {2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI)},
  month = {April},
  year = {2023}
}

Acknowledgements

This work is supported in part by grants PID2019-109117GB-I00 and PID2021-126701OB-I00 funded by MCIN/AEI/10.13039/501100011033 and by “ERDF A way of making Europe”, by the foundation Biofisika Bizkaia, and by grant GIU19/027 funded by the UPV/EHU. Author SR is supported by the Human Frontier Science Program Organization (fellowship LT0007/2022-L) and author GB is supported by a fellowship from the Ministerio de Ciencia e Innovación (fellowship PRE2020-094463).